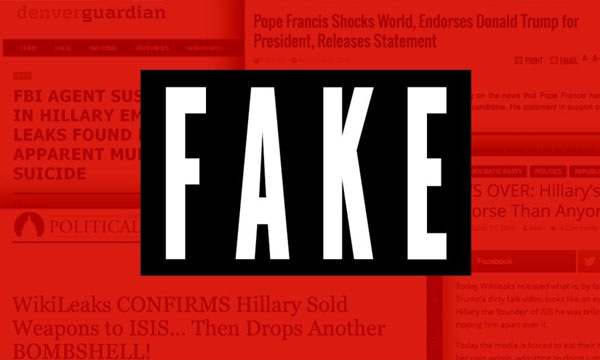

In an era replete with fake news stories, you might expect video evidence to provide a clearer picture of the truth.

You’d be wrong, according to Google engineer Supasorn Suwajanakorn, who has developed a tool that, fed the right input, can create a realistic fake video of a person talking. It mimics the way they speak by closely observing existing footage of their mouth and teeth to create the perfect lip-sync.

Like any technology, it has great potential for both good and mischief.

Suwajanakorn is therefore also working with the AI Foundation on a ‘Reality Defender’ app that would run automatically in web browsers to spot and flag fake pictures or videos.

“I let a computer watch 14 hours of pure Obama video, and synthesized him talking,” Suwajanakorn said while sharing his shockingly convincing work at the TED Conference in Vancouver on Wednesday.

Such technology could be used to create virtual versions of those who have passed away: grandparents could be asked for advice, actors could return to the screen, great teachers could give lessons, and authors could read their works aloud, according to Suwajanakorn.

He pointed to the New Dimensions in Testimony project, which lets people have conversations with holograms of Holocaust survivors.

“These results seemed intriguing, but at the same time troubling; it concerns me, the potential for misuse,” he said.

“So, I am also working on counter-measure technology to detect fake images and video.”

He worried, for example, that war could be triggered by a bogus video of a world leader announcing a nuclear strike.

Step forward ‘Reality Defender’, which will automatically scan for manipulated pictures or video and let users report apparent fakes, harnessing the power of the crowd to bolster the defense.

“Video manipulation will be used in malicious ways unless counter-measures are in place,” he told AFP.

“We have to make it very risky and cost-ineffective.”

While writing fake news may be cheap and easy, it is tough to manipulate video without leaving any traces, according to Suwajanakorn.

Videos, by design, are streams of thousands of images, each of which would have to be perfected in a fake, he reasoned.

“There is a long way to go before we can effectively model people,” said Suwajanakorn, whose work in the field stems from his time as a student at the University of Washington.

“We have to be very careful; we don’t want it to be in the wrong hands.”