Apple believes that privacy is a fundamental human right and that it must not be invaded under any circumstances. People should be able to keep their belongings as private as they wish. Apple’s emphasis on privacy even led it to refuse to help the FBI unlock the iPhone of the San Bernardino shooter, a dead terrorist, in 2016.

The company has stated many times that it is unwilling to compromise on privacy. It even summed up this stance with the slogan ‘What happens on your iPhone, stays on your iPhone’. Apple also released a well-known video showing people oversharing their personal information, including credit card details, with complete strangers.


Apple And Privacy

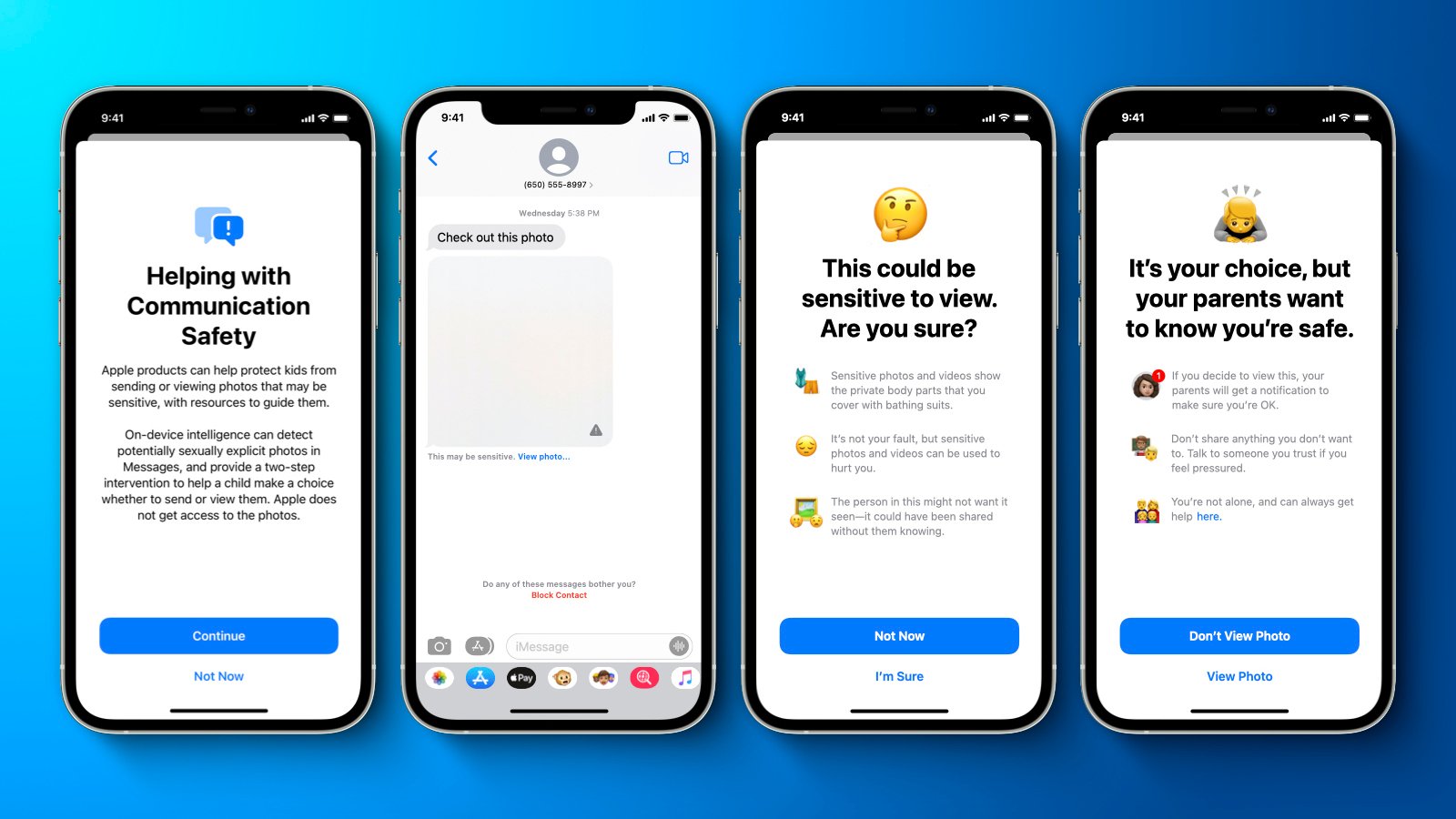

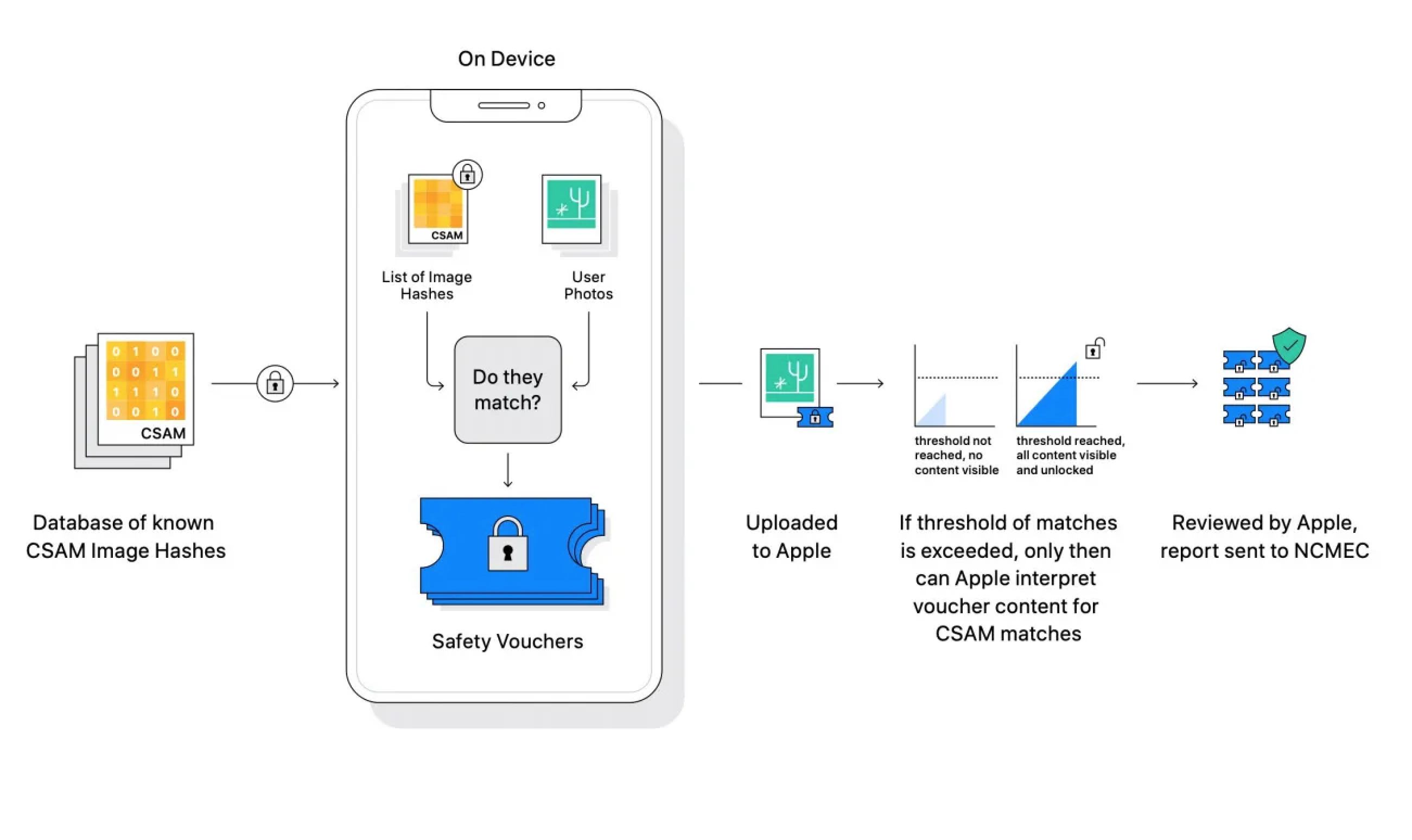

Recently, the company announced a new piece of technology: an on-device (client-side) tool that detects Child Sexual Abuse Material (CSAM). The tool is designed to flag such media as the user sends or receives it. To identify known material, Apple relies on a database of hashes of known CSAM images provided by the National Center for Missing and Exploited Children (NCMEC) and other child safety organizations.

The Messages app will use on-device machine learning to warn about sensitive content. If a photo is sexually explicit, it will be blurred and the child will be asked whether they want to continue viewing it. If they choose to go ahead, the app can notify their parents. CSAM is a very serious problem and must be treated as one; this is Apple’s attempt to limit such illegal activity as much as possible.

A Backdoor Into The System

CSAM detection will also provide important information to law enforcement, and these updates are coming to Apple devices. However, a tool that scans content on the device itself might compromise the integrity of the end-to-end encryption these devices currently offer. Because it can inspect content before it is encrypted or after it is decrypted, it bypasses the end-to-end system entirely and can be considered an obvious backdoor. Not only is this unpredictable, but it also poses serious security and safety risks.


We hope these privacy concerns are resolved soon and that Apple works out a solution that serves both goals. CSAM must be addressed and prevented, but without weakening end-to-end encryption. We will see how the story evolves once the update is released.

Stay tuned for more from Brandsynario!