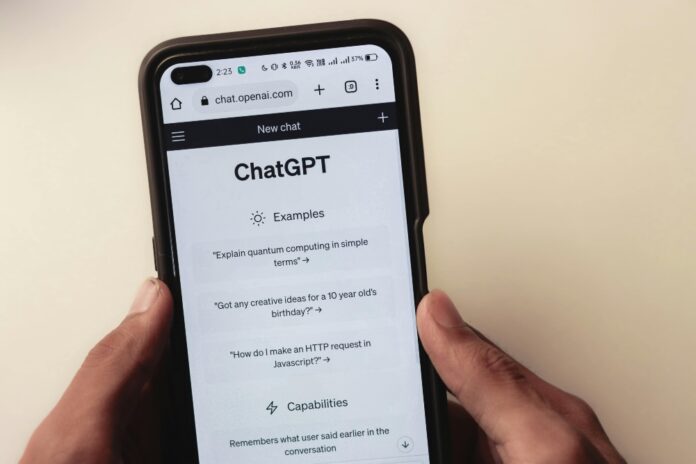

In a strange twist of events, the AI chatbot ChatGPT was recently observed refusing to mention the name “David Mayer.” This unusual restriction has sparked curiosity and speculation among users and AI enthusiasts.

Discovered on Reddit

The issue first came to light on Reddit, where users reported that ChatGPT consistently avoided using the name, regardless of the prompt’s context. Whether it was a simple request to write a sentence containing the name or a complex creative writing prompt, the AI would abruptly halt its response.

ChatGPT’s response to direct questions about the limitation was equally enigmatic. The AI reported that the name too closely matched a sensitive or flagged entity, which might relate to public figures, brands, or specific content policies. The explanation was plausible on its face, but it did not fully satisfy the curiosity of many users.

The Theory Behind It

Several theories have emerged to explain the behavior. Some believe that “David Mayer” might be connected to an individual or entity, possibly linked to the Rothschild name, whose information is sensitive or subject to copyright. Others propose that the name might be triggering a specific filter or moderation rule within the system.

Although the reason is still unknown, the episode shows how hard it is to reconcile AI with human language. It illustrates the difficulty of building machines that can interpret a wide variety of prompts accurately while handling potentially sensitive or ambiguous information.

As AI continues to advance, these limitations must be addressed so that such systems can be used ethically and responsibly. By understanding why these restrictions exist and adjusting the AI’s behavior accordingly, developers can work towards more robust and versatile models.

Stay tuned to Brandsynario for the latest news and updates