Deepfakes are a growing problem across every industry and threaten to erode consumer trust. As deepfake generation evolves rapidly and produces some exceptionally convincing fakes, the average netizen may struggle to keep up. Here are five ways to spot these digital deceptions.

1. Pay Attention to the Face

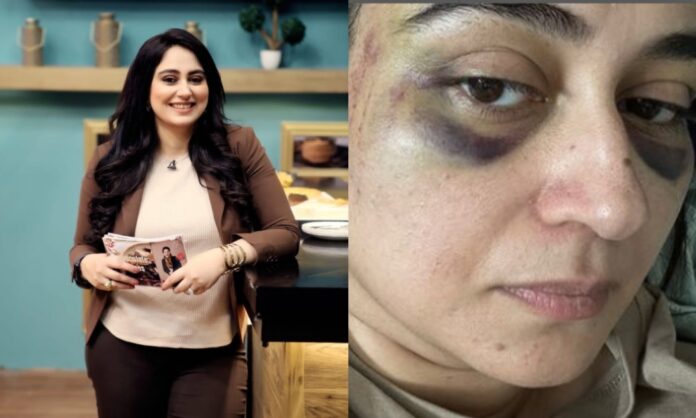

Deepfakes typically involve facial transformations, with one person's face superimposed onto another person's body. Despite advances in technology, fully photorealistic reproduction of complex human skin and the minute muscular motions around our eyes, noses, and mouths remains extremely challenging. According to Christopher Cemper, founder of AIPRM, examining fine skin textures and facial details is crucial. Look for inconsistencies in skin tone, unnatural lighting, or subtle distortions around the eyes and mouth.

2. It’s All in the Hands

Hand anatomy is complex, often stumping even the best artists, and AI image generators are notorious for their inability to produce realistic hands. Look for hands with missing or extra fingers, unnatural poses, or unrealistic shapes, especially in images of hands performing fine motor tasks. A lack of spatial understanding in some AI models can result in these anomalies, making hands a good indicator of deepfakes.

3. Look at Lip-Syncing

In videos, deepfakes may struggle with accurate synchronisation. Check for lip-syncing errors, where the audio and the movement of the lips do not match. Other audio anomalies, such as unnatural pauses, glitches, or stilted speech, can also indicate a deepfake. These errors are often subtle but noticeable upon close inspection.

4. Try an AI Image Detector

Numerous free AI image detectors, such as Everypixel Aesthetics and Illuminarty, use neural networks to analyse images for inconsistencies indicative of AI generation. Jamie Moles, senior technical manager at ExtraHop, notes that detection algorithms, like those in generative adversarial networks (GANs), scan where the digital overlay connects to the actual face being masked. These tools report high accuracy in catching deepfakes, though the cat-and-mouse game continues, as GANs also train the generating models to avoid detection.

5. Check the Source

Compare the video with known source material, such as other videos or images of the same person, looking for discrepancies in appearance, voice, and behaviour. If the source cannot easily be found, check the metadata of the file. Metadata, automatically inserted into images or videos during their creation, can offer valuable insights into the origin of a file. However, metadata alone is not foolproof, as it can be easily manipulated or erased through editing processes.
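For readers comfortable with a little code, the metadata check can be sketched in Python. This is a minimal illustration, not a forensic tool: it only reports whether a JPEG file still carries an embedded Exif metadata block, and the function names `list_jpeg_segments` and `has_exif` are made up for this example. A missing block proves nothing on its own, since many platforms strip metadata on upload, and a present one can be forged.

```python
import struct


def list_jpeg_segments(data: bytes):
    """Yield (marker, payload) for each header segment of a JPEG byte string."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing start-of-image marker")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            break  # unexpected byte; stop scanning rather than guess
        marker = data[pos + 1]
        if marker in (0xDA, 0xD9):  # start of scan / end of image
            break
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if length < 2:
            break
        yield marker, data[pos + 4:pos + 2 + length]
        pos += 2 + length


def has_exif(data: bytes) -> bool:
    """Return True if the JPEG contains an APP1 segment holding Exif data."""
    return any(
        marker == 0xE1 and payload.startswith(b"Exif\x00\x00")
        for marker, payload in list_jpeg_segments(data)
    )
```

To try it on a file, read the bytes first, for example `has_exif(open("photo.jpg", "rb").read())`, where `photo.jpg` is a placeholder path. Treat the result as one clue to weigh alongside the other checks in this list.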

In this landscape of advancing technology, employing AI image detectors and scrutinising source material become imperative. By looking beyond the face and examining the intricacies of AI-generated deception, we can better navigate the digital world and maintain trust in the media we consume.

Stay tuned to Brandsynario for the latest news and updates.